Automated document capturing

About the project:

Ondato is upgrading its KYC process with an auto-capture feature that automatically recognises documents, optimises image quality, and reduces errors from manual capture. Although we had no specific data to confirm it, we expected this enhancement to streamline verification and make user onboarding more efficient.

Product description:

Ondato provides solutions in identity verification, Know Your Customer (KYC), Anti-Money Laundering (AML), and related services. It offers digital identity verification tools and compliance solutions that help businesses authenticate their users' identities and comply with regulatory requirements.

Goal:

To design a user-friendly document capture process that is smooth, clear, and automatic, enhancing the overall user experience and minimising user effort during the document submission phase.

Role:

Owned the task and collaborated with a fellow designer to conduct usability testing and SUS (System Usability Scale) assessments.

Project overview

Optimising auto-capture user experience

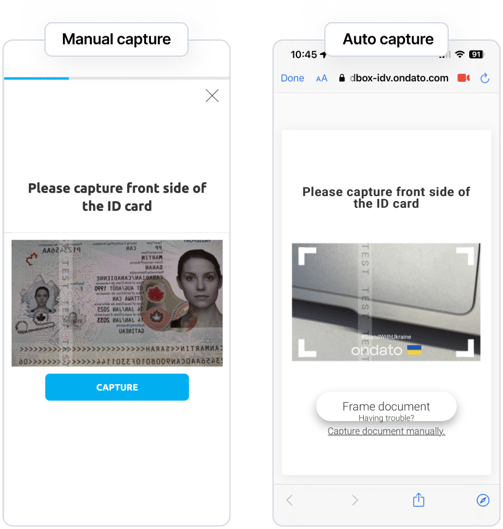

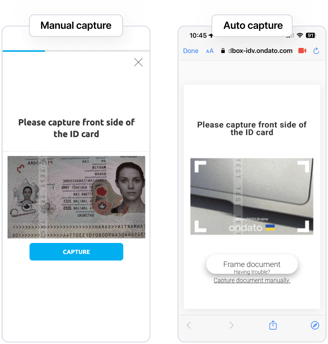

First, I explored the auto-capture version provided by the developers. We wanted to compare its user experience with manual capture, focusing on ease of use, clarity, and satisfaction. To speed up testing, we ran the sessions in the office hallway; despite some initial hesitation from passers-by, we recruited 16 participants.

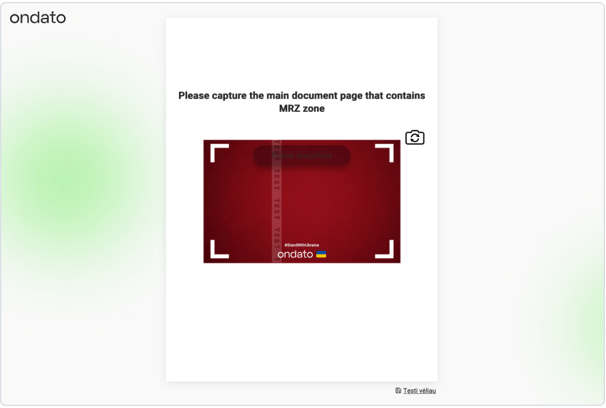

Initial auto-capture version prepared by the developers

User observations and SUS survey analysis

Participants were split into two groups that tested manual and auto-capture in alternating order, minimising order bias. After testing, they completed a System Usability Scale (SUS) questionnaire. During the tests, we observed their interactions and gathered verbal feedback. After the SUS questionnaire, we held brief interviews about their overall experience, their preferred method, and the clarity, simplicity, and satisfaction of each capture method.
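For reference, SUS uses a standard scoring rule: each of the ten items is answered on a 1 to 5 scale, odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The minimal Python sketch below shows that standard calculation; the function name `sus_score` is illustrative, and the sample responses in the usage note are made up rather than taken from this study.

```python
def sus_score(responses: list[int]) -> float:
    """Compute a standard System Usability Scale score (0-100) for one participant.

    `responses` holds the answers to the 10 SUS items, in order, each on a 1-5 scale.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: higher agreement is better.
            total += response - 1
        else:
            # Even items are negatively worded: lower agreement is better.
            total += 5 - response
    # Each item contributes 0-4 points; scale the 0-40 total to 0-100.
    return total * 2.5
```

With hypothetical answers, `sus_score([3] * 10)` gives 50.0, and the most favourable pattern `[5, 1] * 5` gives 100.0. Scoring each method separately per participant and averaging the results is one common way to compare two variants such as manual and auto-capture.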

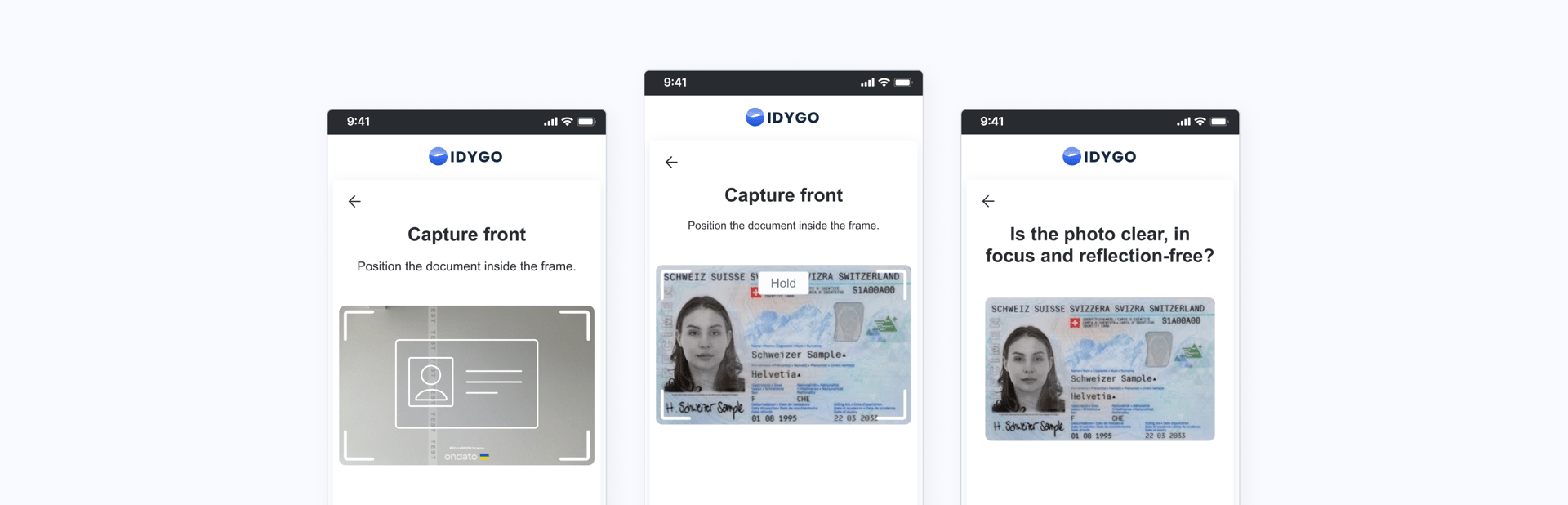

Examples of the two document capture methods provided

Findings from interviews and observations

Out of 16 participants, 10 preferred auto-capture.

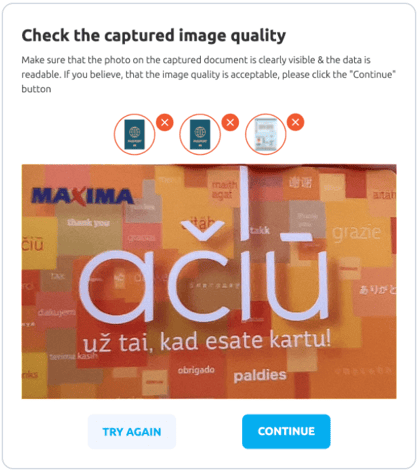

Modal for confirming the captured image

Auto-capture feedback:

3 found it too fast, causing blurry pictures.

3 struggled with unclear instructions.

1 participant with shaky hands preferred manual capture for its ease and speed.

Manual capture feedback:

3 wanted feedback on document positioning.

2 found pressing the capture button while holding the document inconvenient.

Other feedback:

1 participant misread the icons in the image result modal as indicators of poor quality.

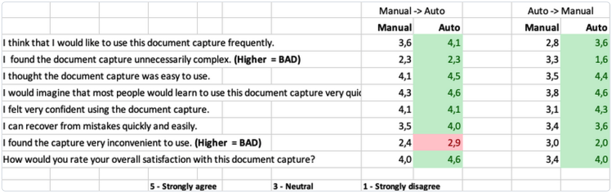

SUS questionnaire showed that users prefer auto-capture

The results showed that users found auto-capture simpler, more user-friendly, and easier to learn. They were also more likely to say they would use it regularly and believed it allowed quicker recovery from mistakes, contributing to higher overall satisfaction.

Results of the SUS survey

Analysing competitor auto-capture solutions

After collecting feedback on the developers' auto-capture solution, I also examined competitors' solutions. This helped me understand how users interact with them, identify common ways of indicating auto-capture, and see how much guidance each one provides.

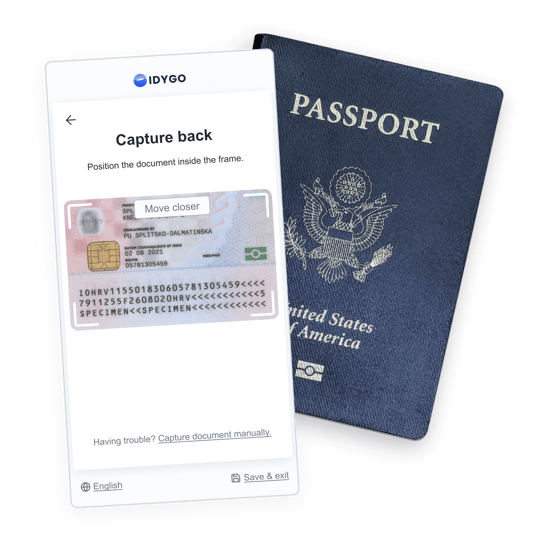

Examples of competitor auto-capture solutions

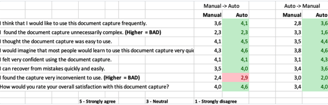

The feedback led to several improvements: we slowed down the auto-capture speed and added frame movement for clarity. Inspired by competitors, I introduced a pre-capture animation, which is especially helpful for instructing users to flip their cards. We replaced the confusing approval modal with a straightforward confirmation screen after capture. Finally, with accessibility in mind, manual capture remains available: an 'Underlink' to manual capture appears after 10 seconds for users who struggle with auto-capture.
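The slower capture and the 10-second manual fallback can be pictured as a small state update run once per camera frame. This is a hypothetical Python sketch, not the developers' implementation: the `document_ready` flag (document detected, framed, and sharp), the 1-second `HOLD_STEADY_S` hold time, and all names are assumptions; only the 10-second fallback comes from this project.

```python
from dataclasses import dataclass
from typing import Optional

# From the case study: the manual-capture link appears after 10 seconds.
MANUAL_FALLBACK_AFTER_S = 10.0
# Hypothetical: how long the document must stay ready before auto-capture fires.
# A non-zero hold time is one way to "slow down" capture and avoid blurry shots.
HOLD_STEADY_S = 1.0


@dataclass
class AutoCaptureState:
    started_at: float                   # time the capture screen opened (seconds)
    ready_since: Optional[float] = None  # when the document last became ready
    captured: bool = False


def update(state: AutoCaptureState, now: float, document_ready: bool) -> tuple[bool, bool]:
    """Return (capture_now, show_manual_link) for the current frame."""
    show_manual_link = now - state.started_at >= MANUAL_FALLBACK_AFTER_S

    if state.captured:
        return False, show_manual_link

    if not document_ready:
        # Any movement or loss of focus restarts the hold timer.
        state.ready_since = None
        return False, show_manual_link

    if state.ready_since is None:
        state.ready_since = now

    if now - state.ready_since >= HOLD_STEADY_S:
        state.captured = True
        return True, show_manual_link

    return False, show_manual_link
```

Keeping the fallback timer independent of the capture logic means the manual option appears on schedule even if the document is never detected, which matches the intent of keeping manual capture as an accessible alternative.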

Document capturing movement

The final result of auto-capture

Conclusions & lessons learned

The findings showed that while 62.5% of participants (10 of 16) preferred auto-capture, retaining manual capture was essential for usability. The project's success hinged on user research, iterative design, and competitor analysis, which together led to significant improvements in the auto-capture method. Key learnings include:

Speed isn't always users' top priority; well-judged pacing is crucial for perceived accuracy, especially in tasks like document capture.

Keeping a broad focus on the overall user interaction can spark ideas for enhancements beyond the specific area being tested.

Pre-screening participants would be more effective, as approaching random people in hallways often led to rejections.